What do Thai, Python, and Waylay’s rules engine have in common? At first glance - nothing. One is a spoken language, one is for writing software, and one powers automation in digital and physical systems. But dig deeper, and they’re all solving the same problem: how to express ideas clearly, efficiently, and within our cognitive limits.

A 2019 study published in Science Advances explored how human languages, despite vast differences in sound and structure, transmit information at surprisingly similar rates: about 39 bits per second. This happens through a fascinating trade-off. Languages like Thai are spoken more slowly but pack more meaning per syllable. Others, like Spanish, are spoken quickly, but each syllable carries less information.

So what does this mean? Essentially, languages trade off speed against information density to hit a kind of “sweet spot” for communication efficiency. Thai speakers may say fewer syllables per second, but each one is packed with meaning; Spanish speakers may speak more quickly, but with simpler syllables. The result? They balance out: in both cases, the overall flow of information is nearly identical. Thai, in other words, is a dense and deliberate language, proving that efficiency in communication isn’t about talking fast - it’s about saying just enough, just right.
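The trade-off comes down to simple arithmetic: information rate is speech rate multiplied by information density. Here is a minimal sketch of that calculation. The syllable rates and bits-per-syllable values are made-up illustrations chosen to land near 39 bits per second; they are not figures from the study.

```python
# Illustrative numbers only; they are NOT measurements from the study.
def information_rate(syllables_per_second: float, bits_per_syllable: float) -> float:
    """Information rate in bits/s = speech rate x information density."""
    return syllables_per_second * bits_per_syllable


# Hypothetical "slow but dense" and "fast but light" languages.
slow_dense = information_rate(syllables_per_second=5.0, bits_per_syllable=7.8)
fast_light = information_rate(syllables_per_second=7.8, bits_per_syllable=5.0)

print(f"slow, dense: {slow_dense:.1f} bits/s")
print(f"fast, light: {fast_light:.1f} bits/s")
```

Two very different speaking styles end up with the same product, which is the balancing act described above.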

All languages adapt to a “sweet spot” of information efficiency, shaped by how much our brains can comfortably handle in real-time.

Programming Languages: The Same Efficiency Trade-offs

Programming languages also juggle speed and information density. Take, for instance, this picture, which measures the entropy of programming languages (analysis by Deedy Das, @deedydas):

Python, like Thai, has minimal syntax and is highly expressive. You get more meaning per line. Java is more like Spanish: it is quick to write and widely used, but often less compact or formal.

Still, we all know that no language is “best.” Each is optimized for its use case and audience. Just like human speech, programming languages evolve to match the needs and constraints of their users.

LLM and Human communication

Explaining Logic vs. Implementing Logic

When an LLM conveys intent purely through code, it faces the challenge of formal precision. That means it must generate syntactically correct, executable code (in Python, JavaScript, or any other language) that captures the exact logic without ambiguity. While this kind of output is ideal for machines, it often lacks readability for humans unfamiliar with the underlying syntax. It's akin to writing a mathematical formula: precise and logically sound, but not always intuitive.

In contrast, when an LLM uses natural language to express logic, the goal shifts from precision to human understanding. It relies on contextual cues, metaphor, and narrative flow - much like how we explain complex ideas to non-experts. This approach aligns closely with how logic is presented in Waylay, a powerful low-code/no-code platform for creating smart workflows, automations, and decisions.

Waylay abstracts away the complexity of traditional programming but doesn’t sacrifice the power of logic or precision. You “drag and drop” sensor nodes, add logical gates, or describe the logic as a JSON DAG - with the goal of representing the intent via conditions, sensors, and actuations - in short, condensing the information flow. Each node is dense with meaning, much like a syllable in Thai or a Python one-liner. Business users can express themselves concisely - like speaking Thai - while developers can dive deeper, adding scripts or APIs - like writing C.
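To make the idea of sensors, gates, and actuations in a DAG concrete, here is a small sketch in plain Python. It is not Waylay's actual JSON schema or API: the node names, field names, and thresholds are all hypothetical, and the evaluator assumes the gates are listed in dependency order.

```python
from typing import Any, Dict, List

# Hypothetical rule: two sensors feed an AND gate, which triggers an actuator.
# Field names and thresholds are illustrative, not Waylay's real schema.
DAG_RULE: Dict[str, Any] = {
    "sensors": {
        "airflow_low": lambda r: r["airflow_m3h"] < 300,
        "fan_running": lambda r: r["fan_rpm"] > 0,
    },
    "gates": {
        "filter_clogged": {"type": "AND", "inputs": ["airflow_low", "fan_running"]},
    },
    "actuators": {
        "create_ticket": {"trigger": "filter_clogged"},
    },
}


def evaluate(rule: Dict[str, Any], reading: Dict[str, float]) -> List[str]:
    """Return the names of the actuators that fire for one reading."""
    # 1. Evaluate every sensor against the reading.
    states = {name: bool(check(reading)) for name, check in rule["sensors"].items()}
    # 2. Evaluate gates in listed order (assumed to be dependency order).
    for name, gate in rule["gates"].items():
        inputs = [states[i] for i in gate["inputs"]]
        states[name] = all(inputs) if gate["type"] == "AND" else any(inputs)
    # 3. Fire each actuator whose trigger node is true.
    return [name for name, act in rule["actuators"].items() if states[act["trigger"]]]


print(evaluate(DAG_RULE, {"airflow_m3h": 250, "fan_rpm": 1200}))
print(evaluate(DAG_RULE, {"airflow_m3h": 450, "fan_rpm": 1200}))
```

The whole rule fits in a dozen lines of data, and each node carries a complete piece of intent, which is the density the paragraph above describes.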

Waylay delivers high information throughput without overwhelming the user, making it cognitively efficient by design.

Why This Matters

Whether you're coding, configuring, or conversing, you're operating under the same constraint: the human brain can only process so much, so clearly, at once. Waylay, like natural and programming languages, is built with this in mind:

- It favors clarity over clutter.

- It lets you move fast without losing meaning.

- It gives experts and non-experts alike the tools to create complex systems efficiently.

The Trade-Off: Cognitive vs. Computational Optimization

Natural language explanations are optimized for humans - fuzzy, flexible, but efficient for shared understanding. On the other hand, code representations are optimized for machines - precise, rigid, and executable, but cognitively denser for people.

This mirrors the study's findings about speech rate vs. information density in human languages. The LLM must choose whether to maximize cognitive clarity (like slow, dense Thai) or computational clarity (like writing code).

What This Means for LLMs in Practice

When LLMs serve as bridges between humans and machines, as in Waylay’s case, they need to shift fluidly between natural language for explanation and collaboration, and formal logic or code for execution and integration. This is especially important in low-code/no-code environments like Waylay, where the user might need human-friendly rule summaries for auditing or governance but also needs machine-executable logic for automation. So a great LLM experience isn’t just about generating code - it is about translating intention into implementation, then explaining that implementation back to the user.
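The two sides of that translation can be sketched with a single rule that is both executed and explained. This is only an illustration under assumed names: the rule format, the `fires` and `summarize` helpers, and the thresholds are hypothetical and do not reflect how Waylay or any LLM does this internally.

```python
import operator
from typing import Any, Dict

# Hypothetical declarative rule; the field names are illustrative only.
RULE: Dict[str, Any] = {
    "all_of": [
        {"metric": "airflow_m3h", "op": "<", "value": 300},
        {"metric": "fan_rpm", "op": ">", "value": 0},
    ],
    "action": "create a maintenance ticket",
}

OPS = {"<": operator.lt, ">": operator.gt, "==": operator.eq}
OP_WORDS = {"<": "is below", ">": "is above", "==": "equals"}


def fires(rule: Dict[str, Any], reading: Dict[str, float]) -> bool:
    """Machine side: evaluate every condition against a reading."""
    return all(OPS[c["op"]](reading[c["metric"]], c["value"]) for c in rule["all_of"])


def summarize(rule: Dict[str, Any]) -> str:
    """Human side: render the same conditions as one readable sentence."""
    conditions = [f"{c['metric']} {OP_WORDS[c['op']]} {c['value']}" for c in rule["all_of"]]
    return f"When {' and '.join(conditions)}, {rule['action']}."


print(summarize(RULE))
print(fires(RULE, {"airflow_m3h": 250, "fan_rpm": 1200}))
```

Because both functions read the same data, the summary an auditor sees cannot drift from the logic that actually runs.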

The Future: Language as the Interface

The ultimate goal for LLMs in logic systems is to make code as transparent and expressive as language, and make language as precise as code, when needed. LLMs are not just code writers - they are translators between thought and execution, adapting their “language” depending on who’s listening: a human or a machine.

Seeing is believing

Visual and natural-language representations make logic transparent, relatable, and easy to verify. They empower both technical and non-technical users to quickly understand system behavior, fostering trust and speeding up collaboration. And now, let’s shift from theory to practice.

What we’ve seen in recent experiments with Waylay MCP, powered by the Claude reasoning engine, takes this to an entirely new level. With just a few examples, screenshots, and brief commentary for each use case, you’ll see exactly what we mean. Spoiler: the results are nothing short of astonishing.

Fixing the Waylay template - finding the logic flaw

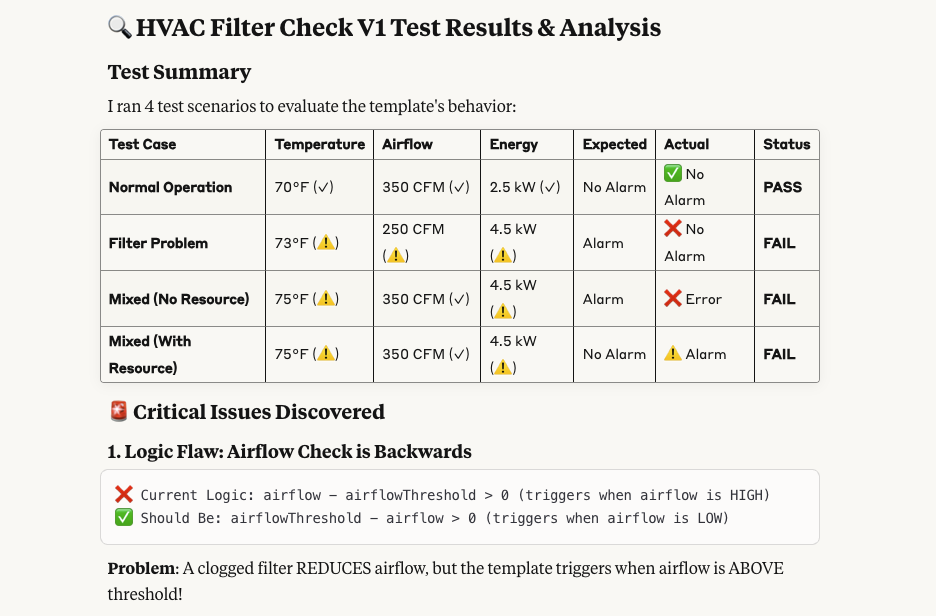

Claude finding a logical flaw in the Waylay template (when an HVAC filter is clogged, the airflow drops, it does not increase!)

And then fixing it and, on top of that, validating the fix!

Bonus: it went further and improved the template, which looked like this:

And Claude made this one, adding a few more things to check!

Refining the logic based on the most recent advancements in publicly available knowledge

This is truly mind-blowing. Claude was given this template to detect septic shock by analyzing patients’ vital signs:

This is what I asked: “Any suggestions for improvement?”

And this is what I got: a new template, based on the latest state-of-the-art papers!

And finally, testing the new template with data sets for different conditions:

Or how about compiling all of that into one Python library and testing it again? No problem! New ML sensor deployed in Waylay and tested!

What does it all mean?

We’ve moved beyond the days of patiently waiting for compilers to run. Today, machines handle everything, guided only by human intent, while Waylay seamlessly manages the rest.

So, where does that leave humans? Or coders, if you prefer? Honestly, it’s a bit uncertain. We’ll definitely need experts to define which aspects of the intent to automate and monitor. But when it comes to implementation, automation, diagnosing machines, and even suggesting repair actions, most of the heavy lifting is already done, at least within the Waylay ecosystem. It just works.

No more excuses for dragging out predictive and preventive maintenance projects for months or years. Just plug in Waylay, and the rest becomes a breeze. You can kick back with a coffee and chat or type to your favourite LLM, while the Waylay system takes care of everything else.